Extended Reality (XR) has already proven its ability to compress time-to-competency, increase safety, and standardize skills. But these days the conversation is dominated by AI, which raises the question: where exactly does AI fit into the realm of XR technology?

In this post we take a deep dive into the state of AI and XR, and how together the two technologies are evolving from “immersive” to intelligent, turning simulations into adaptive, data-driven systems that learn from every interaction and continuously optimize workforce performance.

Early adopters of immersive learning are already seeing results: faster onboarding, zero training incidents, and higher task competency. The next frontier? AI. Read on as we unpack how AI and XR are converging to reshape enterprise training and the world around us.

1) From digital training to intelligent learning

Most enterprises have climbed the e-learning curve. But slideware, video modules, and even basic 3D sims struggle to replicate the high-stakes nuance of real work. XR fixed the immersion gap; the next leap is intelligence—systems that assess, adapt, and improve autonomously.

Two forces are converging:

- XR at scale. Organizations deploy headset fleets, deliver standardized procedures, and integrate with LMSes for auditability. Platforms like Vision Portal centralize deployment, live oversight, analytics, and LMS integration—foundational plumbing for intelligence to flow through.

- AI everywhere. Executive adoption and investment surged across 2024–2025 as leaders operationalized generative AI and predictive analytics for measurable productivity impact (Deloitte).

The result is an intelligent training stack: XR captures rich performance signals, while AI transforms those signals into real-time feedback, predictive risk alerts, and personalized learning paths.

2) Why XR already works—and why AI makes it better

XR solves three chronic training failures:

- Experience deficit. New techs can’t practice rare, risky, or costly procedures enough.

- Inconsistency. Classroom delivery varies by location and trainer.

- Measurement gap. Traditional programs struggle to produce objective, granular performance data.

Case in point: Toyota Material Handling’s customized VR program improved safety compliance and knowledge retention while saving over $1.5M annually. At enterprise scale, Vision Portal adds live session streaming, remote assistance, and analytics tied to LMS records for end-to-end accountability.

AI amplifies these wins by making training adaptive (on the fly), predictive (before incidents), and continuously improving (after every session). McKinsey notes that top-quartile manufacturers accelerate Fourth Industrial Revolution (4IR) adoption when they move from pilots to scaled, capability-driven deployments, which is exactly where AI + XR thrives.

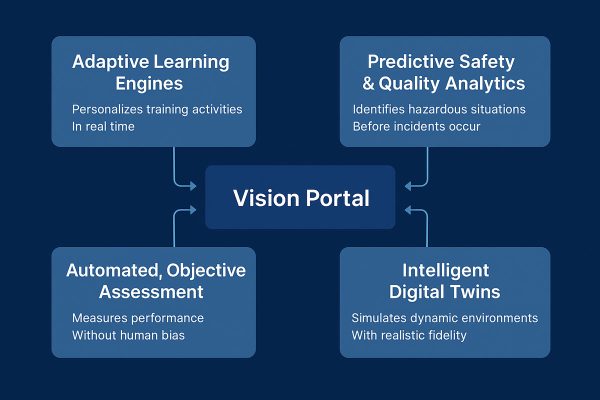

3) The AI + XR capability map

AI doesn’t just enhance XR training; it can transform it into an intelligent, adaptive ecosystem. Below are the four foundational pillars of this evolution, each driving measurable impact at enterprise scale.

A) Adaptive learning engines

What it does: Adjusts difficulty, branching, and coaching in real time as the trainee performs.

How it works: Multimodal models read interaction telemetry (gaze, time-on-step, error counts), controller traces, and voice to infer mastery, then nudge progression or remediation.

Why it matters: Trainees stay in the “challenge sweet spot,” cutting time-to-competency and cognitive overload. With Vision Portal acting as the orchestration layer (users, usage, course-level analytics), adaptive policies can be tested and rolled out at enterprise scale.
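
To make the idea concrete, here is a minimal sketch of how telemetry could be folded into a mastery estimate that nudges difficulty up or down. The signal names, weights, thresholds, and the five-level difficulty scale are all hypothetical assumptions for illustration. This is not Vision Portal's API or a validated model.

```python
from dataclasses import dataclass


@dataclass
class StepTelemetry:
    """Hypothetical per-step signals captured during an XR session."""
    time_on_step_s: float   # seconds the trainee spent on the step
    expected_time_s: float  # target time for a competent operator
    error_count: int        # mistakes made on the step
    gaze_on_target: float   # fraction of time looking at the relevant object, 0..1


def mastery_score(t: StepTelemetry) -> float:
    """Combine the signals into a 0..1 mastery estimate (weights are illustrative)."""
    speed = min(1.0, t.expected_time_s / max(t.time_on_step_s, 1e-6))
    accuracy = 1.0 / (1.0 + t.error_count)
    return 0.4 * speed + 0.4 * accuracy + 0.2 * t.gaze_on_target


def next_difficulty(current: int, score: float, lo: float = 0.5, hi: float = 0.8) -> int:
    """Step difficulty up, down, or hold, clamped to levels 1..5."""
    if score >= hi:
        return min(5, current + 1)   # trainee is cruising: raise the challenge
    if score < lo:
        return max(1, current - 1)   # trainee is struggling: remediate
    return current


# A slow, error-prone attempt drops the trainee from level 3 to level 2.
struggling = StepTelemetry(time_on_step_s=120, expected_time_s=60,
                           error_count=3, gaze_on_target=0.5)
level = next_difficulty(3, mastery_score(struggling))
```

In practice the weights would be learned or tuned against field outcomes rather than hand-picked, but the shape of the loop (signals in, mastery estimate, difficulty decision out) stays the same.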

B) Predictive safety & quality analytics

What it does: Surfaces leading indicators (hesitation on hazardous steps, repeated rework, abnormal pathing) to flag at-risk techs, teams, or sites.

How it works: ML models correlate session features with downstream KPIs (incident rates, quality escapes, time-to-first-fix).

Why it matters: Training shifts from reactive (“after the incident”) to preventive (“before it happens”). WEF’s industrial metaverse blueprint emphasizes the value of persistent, data-rich digital twins for exactly these feedback loops.
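
A first sanity check before building any predictive model is to ask whether a training signal moves with a field KPI at all. The sketch below computes a plain Pearson correlation between one session feature and one downstream metric. The per-site numbers are made up for illustration, and a correlation on its own says nothing about causation.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Made-up per-site data: mean hesitation (seconds) on hazardous steps during
# training, and incidents per 100 workers in the following quarter.
hesitation_s = [2.1, 3.4, 1.8, 4.9, 3.0]
incident_rate = [0.8, 1.5, 0.6, 2.2, 1.2]

r = pearson(hesitation_s, incident_rate)  # strongly positive for this toy data
```

A feature that correlates well across sites becomes a candidate leading indicator; one that does not can be dropped before it clutters a dashboard.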

C) Automated, objective assessment

What it does: Scores procedural accuracy, timing, tool use, and compliance automatically.

How it works: Computer vision, pose estimation, and NLP evaluate task steps and verbal callouts; LLMs generate rubric-aligned feedback.

Why it matters: Removes evaluator bias, standardizes certification, and frees trainers to coach higher-order reasoning. Vision Portal’s real-time streaming and step control provide the supervisory backbone.
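
Once the perception models have turned a session into a list of recognized steps, the scoring itself can be simple and auditable. The sketch below scores an observed step log against an ordered rubric. The rubric fields, step names, and score keys are hypothetical, and the sketch assumes each step is logged at most once.

```python
from dataclasses import dataclass


@dataclass
class RubricStep:
    name: str
    max_seconds: float      # time allowed for full timing credit
    critical: bool = False  # safety-critical steps must never be skipped


def score_session(rubric: list[RubricStep], observed: list[tuple[str, float]]) -> dict:
    """Score observed (step_name, seconds) events against an ordered rubric."""
    rubric_names = [s.name for s in rubric]
    durations = dict(observed)  # step name -> seconds taken
    performed = [name for name, _ in observed if name in rubric_names]
    expected_order = [n for n in rubric_names if n in durations]
    missed = [n for n in rubric_names if n not in durations]
    on_time = [s.name for s in rubric
               if s.name in durations and durations[s.name] <= s.max_seconds]
    return {
        "completion": (len(rubric) - len(missed)) / len(rubric),
        "on_time": len(on_time) / len(rubric),
        "in_sequence": performed == expected_order,
        "missed_critical": [s.name for s in rubric if s.critical and s.name in missed],
    }


rubric = [
    RubricStep("lockout", 30, critical=True),
    RubricStep("verify_zero_energy", 45, critical=True),
    RubricStep("remove_guard", 60),
]
# The trainee skipped the energy check and was slow removing the guard.
report = score_session(rubric, [("lockout", 22.0), ("remove_guard", 75.0)])
```

Because every score traces back to an explicit rubric entry, a trainer reviewing the result can see exactly why a session was flagged.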

D) Intelligent digital twins

What it does: Makes simulations respond like the real world, with stochastic faults, realistic physics, and human factors.

How it works: AI augments rule-based logic with learned behaviors (e.g., crane sway under wind, intermittent electrical faults).

Why it matters: “Right-first-time” readiness without risking assets or people. This is the practical edge of the industrial metaverse—operational, measurable, ROI-driven.
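
As one small example of a stochastic fault, an intermittent electrical fault can be approximated with a two-state model that flips between healthy and faulty with fixed per-tick probabilities. This is a hand-written stand-in for a learned behavior, not a learned model itself. The class name and probabilities are hypothetical, and the seed is there so an instructor can replay the same scenario.

```python
import random
from typing import Optional


class IntermittentFault:
    """Two-state (healthy/faulty) Markov model for an intermittent fault."""

    def __init__(self, p_fail: float, p_recover: float, seed: Optional[int] = None):
        self.p_fail = p_fail        # chance per tick of healthy -> faulty
        self.p_recover = p_recover  # chance per tick of faulty -> healthy
        self.faulty = False
        self._rng = random.Random(seed)  # seeded so a scenario can be replayed

    def tick(self) -> bool:
        """Advance one simulation step; return True while the fault is active."""
        if self.faulty:
            if self._rng.random() < self.p_recover:
                self.faulty = False
        elif self._rng.random() < self.p_fail:
            self.faulty = True
        return self.faulty


# Two runs with the same seed produce the same fault pattern.
fault = IntermittentFault(p_fail=0.05, p_recover=0.3, seed=42)
trace = [fault.tick() for _ in range(200)]
```

The same pattern extends to other disturbances: swap the two-state model for a wind gust model or a sensor drift model and the simulation stops being perfectly predictable, which is the point.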

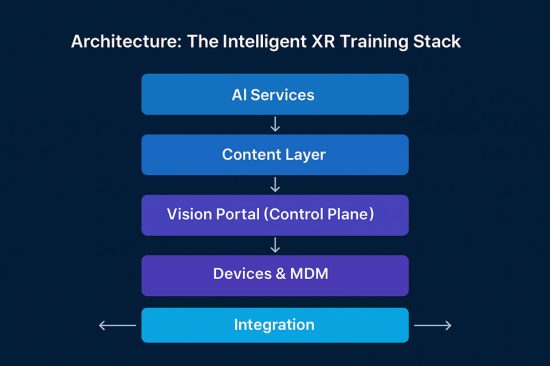

4) Architecture: the intelligent XR training stack

A pragmatic enterprise blueprint:

- Devices & MDM. Standardize on current-gen standalone headsets (e.g., Quest 3) with enterprise device management for provisioning, updates, and access control; Meta’s enterprise programs continue to evolve in features and pricing, with third parties tracking the latest options.

- Vision Portal (Control Plane). Remote assistance (launch groups, live casting, step control, messaging), analytics (users/usage, course comparison, course-specific), and LMS/SSO integration—central to scale, governance, and data capture.

- Content Layer.

→ CGI simulations for highly interactive, tool-based procedures and multiuser scenarios (trainer-led modes).

→ Interactive 360° for contextual awareness, soft skills, and process walkthroughs.

- AI Services.

→ Inference (real time): adaptive branching, automated scoring, safety prompts.

→ Analytics (batch): skill gap mapping, cohort clustering, and ROI dashboards.

- Integration. LMS/LRS for credentialing and audits; IT security, SSO/MFA for identity and data governance.
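
For the integration layer, one common way to move session results into an LRS is an xAPI-style statement. The sketch below builds one for a completed session. It assumes your LRS accepts xAPI, which this post does not confirm for any specific platform. The activity ID, email, and module name are placeholders, and the transport (endpoint, authentication) is deliberately left out.

```python
import json
from datetime import datetime, timezone


def iso_duration(seconds: int) -> str:
    """Format whole seconds as an ISO 8601 duration, e.g. 270 -> 'PT4M30S'."""
    minutes, secs = divmod(seconds, 60)
    return f"PT{minutes}M{secs}S"


def session_statement(email: str, module_id: str, module_name: str,
                      scaled_score: float, passed: bool, seconds: int) -> dict:
    """Build an xAPI-style 'completed' statement for one training session."""
    if not -1.0 <= scaled_score <= 1.0:
        raise ValueError("xAPI scaled scores must fall between -1 and 1")
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": module_id,  # placeholder activity IRI
            "definition": {"name": {"en-US": module_name}},
        },
        "result": {
            "score": {"scaled": scaled_score},
            "success": passed,
            "completion": True,
            "duration": iso_duration(seconds),
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


statement = session_statement(
    "trainee@example.com",
    "https://example.com/xr/forklift-pre-op-check",
    "Forklift pre-operation check",
    scaled_score=0.85, passed=True, seconds=270,
)
payload = json.dumps(statement, indent=2)  # body an LRS client would send
```

Keeping training results in a standard record format is what makes the audit trail portable across LMS vendors.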

5) ROI you can defend in the boardroom

Proven enterprise outcomes today

- Toyota Material Handling: increased safety compliance, improved retention, and $1.5M annual savings from VR training.

- Portfolio traction: Multi-year, seven-figure contracts across automotive, energy, and utilities; consistent YoY growth underpinned by scalable VR platform delivery.

- Platform economics: Remote assistance and analytics reduce trainer travel, minimize downtime, and compress ramp-up cycles.

The AI multiplier

- Time-to-competency: Adaptive difficulty trims dead time; predictive remediation targets exactly what each learner needs.

- Quality yield: Automated scoring and simulated edge cases catch failure modes early.

- Safety performance: Leading indicators guide proactive interventions before incidents.

- Scale: AI turns each session into a learning dataset, improving the system for everyone.

Global analyses reinforce this momentum: executive-level adoption of generative AI is translating into scaled, measurable enterprise programs (Deloitte 2025), while WEF positions the industrial metaverse—XR + AI + cloud—as a blueprint for future operations and workforce development.


6) Building your roadmap (12–18 months)

Phase 1 — Prove value in 90 days

- Pick one high-leverage procedure (safety-critical, high cost-of-error, or training bottleneck).

- Content choice: CGI for tool/hand interactions; 360° for awareness and decision-making.

- Instrument for data: Ensure Vision Portal analytics and LMS integration are configured from day one.

- KPIs: time-to-competency, first-time-right rate, rework/incident proxies, trainer hours saved.
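
It helps to pin down KPI definitions before the pilot starts, so the 90-day numbers are comparable later. Here is a minimal sketch of two of them, under assumed definitions: first-time-right is the share of trainees who pass on their first attempt, and competency is reached at the first run of two consecutive passes. Your program may define both differently.

```python
from typing import Optional


def first_time_right_rate(attempts: dict[str, list[bool]]) -> float:
    """Share of trainees who passed on their very first attempt."""
    firsts = [results[0] for results in attempts.values() if results]
    if not firsts:
        raise ValueError("no attempts recorded")
    return sum(firsts) / len(firsts)


def sessions_to_competency(results: list[bool], streak: int = 2) -> Optional[int]:
    """Number of sessions until the first run of `streak` consecutive passes."""
    run = 0
    for i, passed in enumerate(results, start=1):
        run = run + 1 if passed else 0
        if run >= streak:
            return i
    return None  # competency not yet reached


# Hypothetical pilot cohort: pass/fail per session, in order.
cohort = {
    "trainee_a": [True, True],
    "trainee_b": [False, True, True],
    "trainee_c": [True, False, True],
}
ftr = first_time_right_rate(cohort)
ramp = {name: sessions_to_competency(r) for name, r in cohort.items()}
```

Multiplying sessions-to-competency by average session length gives a time-to-competency figure you can compare against the classroom baseline.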

Phase 2 — Scale to a cohort (3–6 months)

- Multi-site rollout with standardized devices & MDM; train-the-trainer with remote assistance features to reduce classroom friction.

- Introduce AI adaptivity on the most variable steps; pilot automated scoring for objective assessments.

- Governance: SSO/MFA, data retention policies, human-in-the-loop review.

Phase 3 — Enterprise program (6–18 months)

- Predictive analytics: correlate XR signals with field metrics; implement risk dashboards for EHS and Quality.

- Content ops: modularize procedures; reuse digital twins; start a cadence for model updates.

- Change management: budget for end-user onboarding and IS training (devices, LMS, MDM, SSO).

- Continuous improvement: quarterly model review boards; align with operations excellence.

7) Governance, ethics, and change management

AI in training introduces both opportunity and responsibility:

- Fairness & transparency. Make assessment rubrics explicit; log model rationales for auditability.

- Privacy & security. Treat XR telemetry as sensitive; minimize data and restrict access by role.

- Human-in-the-loop. Trainers must supervise automated scoring and intervene when context matters.

- Workforce equity. Upskilling access must be universal. The OECD highlights the urgency of adult learning systems that support AI transitions; the goal is to avoid a two-tier workforce.
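
Two of these principles, data minimization and role-based access, translate directly into code. The sketch below replaces trainee IDs with keyed pseudonyms and filters each record down to the fields a role is allowed to see. The role names, field names, and key handling are hypothetical, and a real deployment would pull the key from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical policy: which telemetry fields each role may see.
VISIBLE_FIELDS = {
    "trainer": {"trainee", "module", "score", "errors"},
    "analyst": {"trainee", "module", "score"},
}


def pseudonymize(trainee_id: str, secret_key: bytes) -> str:
    """Replace a trainee ID with a stable keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, trainee_id.encode("utf-8"), hashlib.sha256).hexdigest()


def view_for(role: str, record: dict) -> dict:
    """Return only the fields the given role is allowed to see."""
    allowed = VISIBLE_FIELDS.get(role, set())  # unknown roles see nothing
    return {k: v for k, v in record.items() if k in allowed}


key = b"example-key-load-from-a-secrets-manager"  # placeholder, not a real key
record = {
    "trainee": pseudonymize("emp-10482", key),
    "module": "lockout-tagout",
    "score": 0.82,
    "errors": 2,
    "gaze_trace": [0.1, 0.4, 0.9],  # raw signal, hidden from every role here
}
analyst_view = view_for("analyst", record)
```

The keyed hash keeps a trainee's sessions linkable for analytics without exposing who they are to anyone who lacks the key.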

Recent reports show a growing AI confidence gap between executives and frontline staff; investment in training is the cure, not a cost center.

Pro tip: Anchor AI uses to existing compliance frameworks (LMS records, SSO/MFA, device management), which Vision Portal already supports in enterprise deployments.

8) The intelligent trainer: what “good” looks like in 2026

- Personalized by default. Every scenario adapts to the learner’s skill profile.

- Predictive safety. Supervisors see risk alerts and recommended interventions before incidents.

- Objective certification. Automated scoring with human review compresses time-to-credential while improving trust.

- Operational integration. Training outputs flow into production KPIs; leaders manage training like a revenue-impacting process, not a cost.

- Device and data discipline. Enterprise MDM and identity govern the fleet; audits are push-button tasks.

- Industrial metaverse ready. Digital twins unify simulation, analytics, and knowledge capture across the asset lifecycle (WEF).

9) Design patterns you can steal

- Adaptive Remediation Loop

→ Detect hesitation or repeated errors on steps.

→ Branch to a micro-lesson (annotated exploded view, slowed timing, ghost toolpath).

→ Return to scenario with slight variation to ensure transfer.

→ Log time-to-recovery and error delta to the analytics layer.
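
The remediation loop above can be sketched in a few lines. The thresholds and field names are hypothetical, and time-to-recovery is assumed here to mean the micro-lesson time plus the retry time.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    errors: int          # mistakes on the step
    seconds: float       # time taken on the step
    hesitation_s: float  # longest pause before acting


def needs_remediation(a: Attempt, max_errors: int = 2,
                      max_hesitation_s: float = 5.0) -> bool:
    """Trigger a micro-lesson on repeated errors or a long hesitation."""
    return a.errors >= max_errors or a.hesitation_s > max_hesitation_s


def remediation_log(before: Attempt, micro_lesson_s: float, after: Attempt) -> dict:
    """Summarize one detour through the micro-lesson for the analytics layer."""
    return {
        "time_to_recovery_s": micro_lesson_s + after.seconds,
        "error_delta": after.errors - before.errors,  # negative means improvement
        "recovered": not needs_remediation(after),
    }


first_try = Attempt(errors=3, seconds=80.0, hesitation_s=2.0)
if needs_remediation(first_try):
    # ... branch to the micro-lesson, then replay a varied scenario ...
    retry = Attempt(errors=0, seconds=40.0, hesitation_s=1.0)
    entry = remediation_log(first_try, micro_lesson_s=90.0, after=retry)
```

Logging the error delta per step, rather than per session, is what later lets you see which micro-lessons actually work.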

- Predictive EHS Dashboard

→ Inputs: session heatmaps, out-of-sequence actions, rushed timings.

→ Model: weekly rolling model per site and team.

→ Output: “Top 5 risk factors” and “Top 10 trainees to coach this week.”

→ Owner: EHS with Training Ops; review cadence biweekly.
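
A first version of the "trainees to coach this week" list does not need a trained model at all. The sketch below ranks trainees by a simple weighted count over a rolling seven-day window. The weights, tuple layout, and names are hypothetical, and a production system would replace the hand-set weights with a fitted model.

```python
from collections import defaultdict
from datetime import date, timedelta


def top_trainees_to_coach(sessions, today: date, n: int = 10, window_days: int = 7):
    """Rank trainees by risk points accumulated inside the rolling window.

    `sessions` is an iterable of
    (trainee, session_date, out_of_sequence_actions, rushed_steps) tuples.
    """
    cutoff = today - timedelta(days=window_days)
    risk = defaultdict(int)
    for trainee, day, out_of_sequence, rushed in sessions:
        if cutoff < day <= today:
            risk[trainee] += 2 * out_of_sequence + rushed  # illustrative weights
    # Highest risk first; ties broken alphabetically for a stable list.
    ranked = sorted(risk.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:n]


sessions = [
    ("ana", date(2025, 3, 10), 2, 1),
    ("ben", date(2025, 3, 11), 0, 3),
    ("ana", date(2025, 3, 1), 5, 5),   # outside the window, ignored
    ("cy", date(2025, 3, 12), 1, 0),
]
to_coach = top_trainees_to_coach(sessions, today=date(2025, 3, 12), n=2)
```

Starting with a transparent score like this also gives EHS and Training Ops something concrete to challenge in the biweekly review before a model takes over.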

- Objective Multiuser Evaluation

→ Use trainer-led multiplayer mode to simulate team procedures.

→ An LLM scores communication clarity, handoff timing, and adherence to PPE protocols.

→ Vision Portal captures all artifacts and pushes results to the LMS.

10) Budgeting and resourcing, realistically

A practical mix observed across enterprise programs:

- One-time (per module): Digital twin creation + interaction design for CGI simulations; or 360° production for context training.

- Platform & Support (annual): Vision Portal (remote assistance, analytics), maintenance & support for modules, and MDM/device services.

- People: Instructional design, 3D/Unity dev, data/AI enablement, and change management trainers.

The macro case for investment is strong: corporate training spend is projected in the hundreds of billions and XR’s enterprise slice is accelerating. Pull forward ROI by prioritizing procedures with high incident cost or high variability. For more information on VR training costs, check out our detailed guide.

11) Branding and UX considerations (they matter)

Adoption hinges on trust and usability. Follow brand-consistent typography (Inter/Open Sans) and use a clean interface hierarchy aligned with your corporate design system to reduce cognitive load and boost trainer confidence. In practice:

- Clear, consistent menuing and login experience across modules.

- Visual, audio, and haptic feedback for step confirmation and error states.

- Scalable UI that works for novice and expert operator profiles.

Conclusion

The last decade digitized training. The next decade intelligizes it. XR gives employees realistic practice at near-zero risk; AI makes that practice tailored, predictive, and provably effective. Together, they form a reinforcing loop where every session trains the system—and the system trains everyone better.

Enterprises that adopt AI + XR won’t just train faster; they’ll operate smarter. If you’re ready to move from pilots to an intelligent training program with measurable impact on safety, quality, and productivity, the blueprint is here—and the technology is ready.

References & Further Reading

VR Vision resources

- VR Vision Case Study: Toyota Material Handling

- XR Training Solutions for Enterprise

- Vision Portal Overview

External insights

- Deloitte, State of Generative AI in the Enterprise 2024 (Q4); GenAI and the Future Enterprise

- World Economic Forum, Navigating the Industrial Metaverse: A Blueprint for Future Innovations (Mar 2024)

- McKinsey, Adopting AI at Speed and Scale in Manufacturing

- OECD, Training Supply for the Green and AI Transitions; AI, Education & Skills

- Meta Quest for Business / Enterprise device management updates (coverage & analysis)

- Dayforce survey on AI upskilling gap (context for workforce equity)